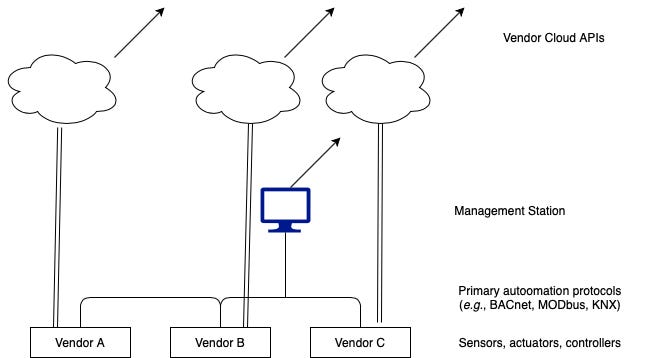

Vertical clouds: ultimate lock-in

Architectural confusion

A long time ago, “interoperability” for automation and controls was actually pretty simple. Because systems usually weren’t networked, you just had to make sure that the vendor implemented a relatively simple standard like Modbus, HART, or even BACnet; as long as you had the data sheet and a competent controls tech, you could plug your shiny new controller into whatever other system you had with some arcane register-fu. Systems were interoperable (although not usually interchangeable): get tired of one vendor, and you could generally rip out its controller and reprogram your other controllers to talk to the replacement.
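For readers who never had to do it, here is a minimal sketch of what that “register-fu” tends to look like. It assumes a hypothetical data sheet that says a temperature is stored as an IEEE 754 float spread over two 16-bit Modbus holding registers; the function name and the word-order option are illustrative, not taken from any particular vendor.

```python
import struct


def decode_float32(registers, word_order="big"):
    """Combine two 16-bit register values into one 32-bit float.

    word_order is an assumption you have to confirm on the data sheet:
    "big" means the most significant word comes first, "little" means
    the two words are swapped.
    """
    if len(registers) != 2:
        raise ValueError("expected exactly two 16-bit registers")
    first, second = registers
    if word_order == "little":
        first, second = second, first
    elif word_order != "big":
        raise ValueError("word_order must be 'big' or 'little'")
    # Pack the two words as big-endian unsigned shorts, then reinterpret
    # the resulting four bytes as a big-endian float.
    raw = struct.pack(">HH", first, second)
    return struct.unpack(">f", raw)[0]


# Register values as they might come back from a read of two holding registers.
print(decode_float32([0x41AC, 0x0000]))                       # 21.5
print(decode_float32([0x0000, 0x41AC], word_order="little"))  # 21.5
```

The point is that everything you needed was on the data sheet: which registers, which encoding, which word order.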

Fast forward (a lot) to today. Most vendors of sensors and primary automation systems (motion controllers, thermostats, PLCs, etc.) still rely on the same field-level protocols, but now also offer “cloud adaptors.” GE Current is one example, but nearly every vendor now offers a service to connect its on-premises offering to the cloud.

Down at the bottom, you’ve got lots of different systems: not really just controllers, but whole systems of sensors, actuators, and controllers. Each of them offers a connection to its own cloud, with (usually) user-friendly APIs on top.

Why Does Everyone Want Me to Buy a Cloud?

There are some problems with this situation, some more obvious than others, and overall they arguably make dealing with this environment worse than the old world where you just had a pile of BACnet or whatever. To get at the problems, we first have to understand why people think cloud is a good idea.

From the vendor point of view, SaaS revenue is addictive, and because the customer never receives a license to the cloud portion of the software, highly predictable (good luck canceling). CFOs will wax poetic about CAC, LTV, ARR, and so on.

From a customer point of view, you get automatic updates (possibly a mixed blessing) and “modern” technologies like REST and XML. However, I think the real potential “win” from integrating in the cloud is looking across systems. One would think that if you had lighting occupancy sensors it would be easy to tie them into the security system, so that if a room becomes occupied in the middle of the night, it fires a security alert; but of course it isn’t.

So vendors get predictable revenue, and customers get a (theoretically) easier job of building their applications.
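The occupancy-to-security rule is trivial once both systems’ data sits in one place, which is what makes the difficulty so frustrating. A minimal sketch, assuming the lighting system’s events have already been pulled into a common shape; the `OccupancyEvent` type and the 22:00 to 06:00 window are hypothetical:

```python
from dataclasses import dataclass
from datetime import datetime, time


@dataclass(frozen=True)
class OccupancyEvent:
    """Hypothetical normalized event from a lighting occupancy sensor."""
    room_id: str
    occupied: bool
    at: datetime


def is_after_hours(moment, start=time(22, 0), end=time(6, 0)):
    """True if the time of day falls in a window that wraps past midnight."""
    t = moment.time()
    return t >= start or t < end


def security_alerts(events):
    """Return one alert message per after-hours 'occupied' event."""
    return [
        f"After-hours occupancy in {e.room_id} at {e.at.isoformat()}"
        for e in events
        if e.occupied and is_after_hours(e.at)
    ]


events = [
    OccupancyEvent("R-201", True, datetime(2024, 1, 15, 2, 30)),
    OccupancyEvent("R-201", True, datetime(2024, 1, 15, 14, 0)),
    OccupancyEvent("R-305", False, datetime(2024, 1, 15, 23, 0)),
]
for alert in security_alerts(events):
    print(alert)
```

The rule itself is a dozen lines. The hard part is everything the sketch assumes away: getting the events out of the lighting vendor’s cloud, agreeing on what `room_id` means, and being allowed to hand the result to the security system.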

There’s Got to be a Catch

Sure, big catch. Let’s start with the technical stuff. These mostly fall into a few areas:

Semantic gaps: or, “wtf is a room”? Despite the efforts of ASHRAE 223P, Brick, Haystack, RealEstateCore, BOMA, BEDES, IFC, and so on, pretty much none of the systems you can buy today use a standard data model and taxonomy, and where they do, it’s optional. So unless the installer was extremely scrupulous about their conventions, it’s likely you have irreconcilable copies of the data everywhere. Digital twins (e.g., Microsoft’s or ThoughtWire’s) are supposed to fix this, but it’s a many-to-many problem; more on this later.

Multiple administrative domains: possibly less obvious, but each cloud is almost always owned and operated by a separate entity. This means different failure modes, different security frameworks, different billing.

Multiple security models: building on the previous point, each vendor implements its own security model. One connects with OIDC, the other with a plain password. One lets you control access at the object level, the other at the site level. And so on. So applying any global policy is probably impossible.

Multiple technologies: the vendors usually implement something sensible — but all different. REST, XML-RPC, SOAP, gRPC, whatever.
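To make the semantic gap concrete, here is a minimal sketch of two vendors describing the same room in different ways, plus the adapter code you end up writing for each. Both payload shapes and all field names are invented for illustration and do not correspond to any real vendor’s API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Room:
    """One canonical answer to 'what is a room?' for this sketch."""
    building: str
    floor: int
    number: str


def from_lighting(payload):
    # Hypothetical lighting vendor: everything crammed into one
    # slash-delimited free-text field typed in by the installer.
    building, floor, number = (
        part.strip() for part in payload["zone_name"].split("/")
    )
    return Room(building, int(floor), number)


def from_hvac(payload):
    # Hypothetical HVAC vendor: a nested object with its own field names.
    space = payload["space"]
    return Room(str(space["bldg"]), int(space["floor"]), str(space["label"]))


lighting = {"zone_name": "A / 2 / 201"}
hvac = {"space": {"bldg": "A", "floor": 2, "label": "201"}}

# Only after normalization can you tell that both systems mean the same room.
print(from_lighting(lighting) == from_hvac(hvac))  # True
```

With N systems you write N of these adapters against one canonical model, or roughly N squared if you integrate pairwise. The lighting adapter also only works if the installer stuck to the naming convention, which is the scrupulousness problem again.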

We gotta fix this!

Of course, almost no one seems to achieve a nirvana, where systems share data easily and frictionlessly. In fact, it’s probably more accurate to say that man’s hubris in trying to achieve that nirvana actually comes closer to hell on earth. But I digress.

At the moment, practically every project I’ve seen that wants to achieve any sort of integration has been a slog, due to the need to reengineer existing systems and align data models, leaving aside the other technical problems. However, learning starts at home, so at this point the only solution is for buyers to be relatively sophisticated about what they are asking for. I think anyone contemplating a “digitization” or “digital twin” initiative should consider a few things:

What is the desired end state? Are there specific integrations or applications that are important, or is there a more general desire for future “open-ended” applications? This is important because collecting metadata or ontologies and keeping them up to date is a potentially endless task, and it is easier to slim down if you can define when you are done.

What common data model should you adopt? You’re going to want to define one canonical “room” object type and so on, so you’d better figure that out. 80/20 is the name of the game here: pick one and get started.

Define three initial applications of the integration you are building. Whether it’s meeting room management, factory yield, or something else, you need something at the end of the project you can point to and say “success”!

Pick an integration architecture and solution.

Whatever you decide, push your requirements into vendor documents along with validation criteria. Make sure that when you are buying systems, the vendors know what is expected and that you have a way to check if they did it.
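As a minimal sketch of what a checkable validation criterion might look like, the following assumes you have asked the vendor to deliver a point list in which every point names its room using your canonical room IDs. The required fields and the record shape are hypothetical; the real ones would come from your own requirements document.

```python
# Hypothetical requirement: every delivered point carries these fields.
REQUIRED_FIELDS = ("point_id", "room", "unit")


def validate_points(points, known_rooms):
    """Return a list of human-readable problems; empty means the export passes."""
    problems = []
    for index, point in enumerate(points):
        missing = [field for field in REQUIRED_FIELDS if not point.get(field)]
        if missing:
            problems.append(f"point {index}: missing {', '.join(missing)}")
        elif point["room"] not in known_rooms:
            problems.append(f"point {index}: unknown room {point['room']!r}")
    return problems


known_rooms = {"A-2-201", "A-2-202"}
delivered = [
    {"point_id": "AHU1.SAT", "room": "A-2-201", "unit": "degC"},
    {"point_id": "L-17", "room": "Conf Rm", "unit": "lux"},
    {"point_id": "VAV-3", "room": "A-2-202"},
]

for problem in validate_points(delivered, known_rooms):
    print(problem)
```

A check like this can be attached to the contract as an acceptance test: the vendor knows in advance exactly what will be run against their deliverable, and you have an objective way to say whether they did it.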